In 1931 Kurt Gödel proved that any consistent symbolic language system rich enough to express the principles of arithmetic would include statements that can be neither proven nor disproven within the system. A necessary implication is that in such systems, there are infinitely many true statements that cannot (within that system) be proven to be true, and infinitely many false statements that cannot (within that system) be proven to be false. Gödel’s achievement has sometimes been over-interpreted since–as grounds for radical skepticism about the existence of truth, for example–when really all it expressed were some limitations on the possibility of formally modeling a complete system of logic from which all mathematical truths would deductively flow. Gödel gives us no reason to be skeptical of truth; he gives us reason to be skeptical of the possibility of proof, even in a domain so rigorously logical as arithmetic. In so doing, he teaches us that–in mathematics at least–truth and proof are different things.

What is true for mathematics may be true for societies as well. The relationship between truth and proof is increasingly strained online, where we spend increasing portions of our lives. Finding the tools to extract reliable information from the firehose of our social media feeds is proving difficult. The latest concern is “deepfakes”: video content that takes identifiable faces and voices and either puts them on other people’s bodies or digitally renders fabricated behaviors for them. Deepfakes can make it seem as if well-known celebrities or random private individuals are appearing in hard-core pornography, or as if world leaders are saying or doing things they never actually said or did. A while ago, the urgent concern was fake followers: the prevalence of bots and stolen identities being used to artificially inflate follower and like counts on social media platforms like Twitter, Facebook, and Instagram–often for a profit. Some worry that these and other features of online social media are symptoms of a post-truth world, where facts or objective reality simply do not matter. But to interpret this situation as one in which truth is meaningless is to make the same error made by those who would read Gödel’s incompleteness theorems as a license to embrace epistemic nihilism. Our problem is not one of truth, but one of proof. And the ultimate question we must grapple with is not whether truth matters, but to whom it matters, and whether those to whom truth matters can form a cohesive and efficacious political community.

The deepfakes problem, for example, does not suggest that truth is in some new form of danger. What it suggests is that one of the proof strategies that we had thought bound our political community together may no longer do so. After all, an uncritical reliance on video recordings as evidence of what has happened in the world is untenable if video of any possible scenario can be undetectably fabricated.

But this is not an entirely new problem. Video may be more reliable than other forms of evidence in some ways. But video has always proven different things to different people. Different observers, with different backgrounds and commitments, can and will justify different–even inconsistent–beliefs using identical evidence. Where one person sees racist cops beating a black man on the sidewalk, another person will see a dangerous criminal refusing to submit to lawful authority. These differences in the evaluation of evidence reveal deep and painful fissures in our political community, but they do not suggest that truth does not matter to that community–if anything, our intense reactions to episodes of disagreement suggest the opposite. As these episodes demonstrate, video was never truth, it has always been evidence, and evidence is, again, a component of proof. We have long understood this distinction, and should recognize its importance to the present perceived crisis.

What, after all, is the purpose of proof? One purpose–the purpose for which we often think of proof as being important–is that proof is how we acquire knowledge. If, as Plato argued, knowledge is justified true belief (or even if this is merely a necessary, albeit insufficient, basis for a claim to knowledge), proof may satisfy some need for justification. But that does not mean that justification can always be derived from truth. One can be a metaphysical realist–that is, believe that some objective reality exists independently of our minds–without holding any particular commitments regarding the nature of justified belief. Justification is, descriptively, whatever a rational agent will accept as a reason for belief. And in this view, proof is simply a tool for persuading rational agents to believe something.

Insofar as proof is thought to be a means to acquiring knowledge, the agent to be persuaded is often oneself. But this obscures the deeply interpersonal–indeed social–nature of proof and justification. When asking whether our beliefs are justified, we are really asking ourselves whether the reasons we can give for our beliefs are such as we would expect any rational agent to accept. We can thus understand the purpose of proof as the persuasion of others that our own beliefs are correct–something Socrates thought the orators and lawyers of Athens were particularly skilled at doing. As Socrates recognized, this understanding of proof clearly has no necessary relation to the concept of truth. It is, instead, consistent with an “argumentative theory” of rationality and justification. To be sure, we may have strong views about what rational agents ought to accept as a reason for belief–and like Socrates, we might wish to identify those normative constraints on justification with some notion of objective truth. But such constraints are contested, and socially contingent.

This may be why the second social media trend noted above–“fake followers”–is so troubling. The most socially contingent strategy we rely on to justify our beliefs is to adopt the observed beliefs of others in our community as our own. We often rely, in short, on social proof. This is something we apparently do from a very early age, and indeed, it would be difficult to obtain the knowledge needed to make our way through the world if we didn’t. When a child wants to know whether something is safe to eat, it is a useful strategy to see whether an adult will eat it. But what if we want to know whether a politician actually said something they were accused of saying on social media–or something a video posted online appears to show them saying? Does the fact that thousands of Facebook accounts have “liked” the video justify any belief in what the politician did in fact say, one way or another?

Social proof has an appealingly democratic character, and it may be practically useful in many circumstances. But we should clearly recognize that the acceptance of a proposition as true by others in our community doesn’t have any necessary relation to actual truth. Your parents were wise to warn you that you shouldn’t jump off a cliff just because all your friends did it. We obviously cannot rely exclusively on social proof as a justification for belief. To this extent, as Ian Bogost put it, “all followers are fake followers.”

Still, the observation that social proof is an imperfect proxy for truth does not change the fact that it is–like the authority of video–something we have a defensible habit of relying on in (at least partially) justifying (at least some of) our beliefs. Moreover, social proof makes a particular kind of sense under a pragmatist approach to knowledge. As the pragmatists argued, the relationship between truth and proof is not a necessary one, because proof is ultimately not about truth; it is about communities. In Rorty’s words:

For the pragmatist … knowledge is, like truth, simply a compliment paid to the beliefs we think so well justified that, for the moment, further justification is not needed. An inquiry into the nature of knowledge can, on his view, only be a socio-historical account of how various people have tried to reach agreement on what to believe.

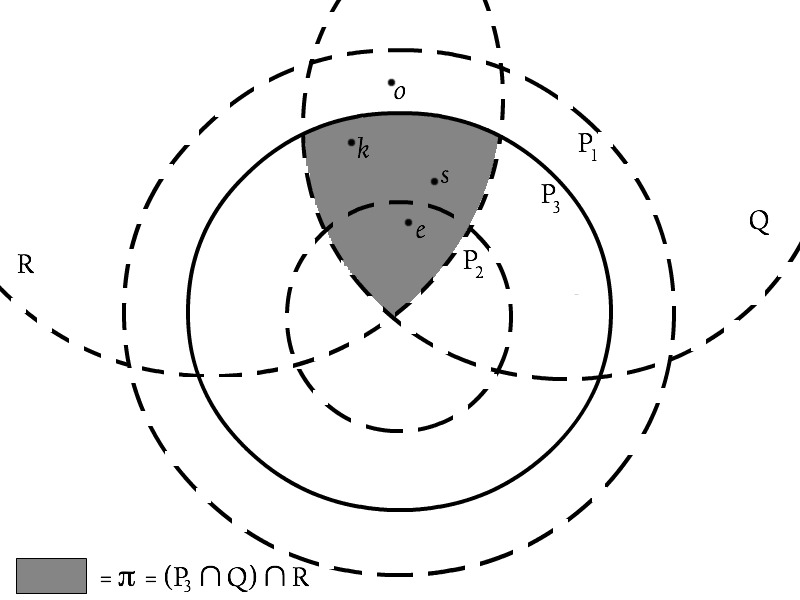

Whether we frame them in terms of Kuhnian paradigms or cultural cognition, we are all familiar with examples of different communities asserting or disputing truth on the basis of divergent or incompatible criteria of proof. Organs of the Catholic Church once held that the Earth is motionless and the sun moves around it–and banned books that argued the contrary–relying on the authority of Holy Scripture as proof. The contrary position that sparked the Galilean controversy–eppur si muove–was generated by a community that relied on visual observation of the celestial bodies as proof. Yet another community might hold that the question of which body moves and which stands still depends entirely on the identification of a frame of reference, without which the concept of motion is ill-defined.

For each of these communities, their beliefs were justified in the Jamesian sense that they “worked” to meet the needs of individuals in those communities at those times–at least until they didn’t. As particular forms of justification stop working for a community’s purposes, that community may fracture and reorganize around a new set of justifications and a new body of knowledge–hopefully but not necessarily closer to some objective notion of truth than the body of knowledge it left behind. Even if we think there is a truth to the matter–as one feels there must be in the context of the physical world–there are surely multiple epistemic criteria people might cite as justification for believing such a truth has been sufficiently identified to cease further inquiry, and those criteria might be more or less useful for particular purposes at particular times.

This is why the increasing unreliability of video evidence and social proof is so troubling in our own community, in our own time. These are criteria of justification that have up to now enjoyed (rightly or wrongly) wide acceptance in our political community. But when one form of justification ceases to be reliable, we must either discover new ones or fall back on others–and either way, these alternative proof strategies may not enjoy such wide acceptance in our community. The real danger posed by deepfakes is not that recorded truth will somehow get lost in the fever swamps of the Internet. The real danger posed by fake followers is not that half a million “likes” will turn a lie into the truth. The deep threat of these new phenomena is that they may undermine epistemic criteria that bind members of our community in common practices of justification, leaving only epistemic criteria that we do not all share.

This is particularly worrisome because quite often we think ourselves justified in believing what we wish to be true, to the extent we can persuade ourselves to do so. Confirmation bias and motivated reasoning significantly shape our actual practices of justification. We seek out and credit information that will confirm what we already believe, and avoid or discredit information that will refute our existing beliefs. We shape our beliefs around our visions of ourselves, and our perceived place in the world as we believe it should be. To the extent that members of a community do not all want to believe the same things, and cannot rely on shared modes of justification to constrain their tendency toward motivated reasoning, they may retreat into fractured networks of trust and affiliation that justify beliefs along ideological, religious, ethnic, or partisan lines. In such a world, justification may conceivably come to rest on the argument popularized by Richard Pryor and Groucho Marx: Who are you going to believe: me, or your lying eyes?

The danger of our present moment, in short, is that we will be frustrated in our efforts to reach agreement with our fellow citizens on what we ought to believe and why. This is not an epistemic crisis, it is a social one. We should not be misled into believing that the increased difficulty of justifying our beliefs to one another within our community somehow puts truth further out of our grasp. To do so would be to embrace the Möbius strip of epistemology Orwell put in the mouths of his totalitarians:

Anything could be true. The so-called laws of nature were nonsense. The law of gravity was nonsense. “If I wished,” O’Brien had said, “I could float off this floor like a soap bubble.” Winston worked it out. “If he thinks he floats off the floor, and if I simultaneously think I see him do it, then the thing happens.” Suddenly, like a lump of submerged wreckage breaking the surface of water, the thought burst into his mind: “It doesn’t really happen. We imagine it. It is hallucination.” He pushed the thought under instantly. The fallacy was obvious. It presupposed that somewhere or other, outside oneself, there was a “real” world where “real” things happened. But how could there be such a world? What knowledge have we of anything, save through our own minds? All happenings are in the mind. Whatever happens in all minds, truly happens.

This kind of equation of enforced belief with truth can only hold up where–as in the ideal totalitarian state–justification is both socially uncontested and entirely a matter of motivated reasoning. Thankfully, that is not our world–nor do I believe it ever can truly be. To be sure, there are always those who will try to move us toward such a world for their own ends–undermining our ability to forge common grounds for belief by fraudulently muddying the correlations between the voices we trust and the world we observe. But there are also those who work very hard to expose such actions to the light of day, and to reveal the fabrications of evidence and manipulations of social proof that are currently the cause of so much concern. This is good and important work. It is the work of building a community around identifying and defending shared principles of proof. And I continue to believe that such work can be successful, if we each take up the responsibility of supporting and contributing to it. Again, this is not an epistemic issue, it is a social one.

The fact that this kind of work is necessary in our community and our time may be unwelcome, but it is not cause for panic. Our standards of justification–the things we will accept as proof–are within our control, so long as we identify and defend them, together. Those who would undermine these standards can only succeed if we despair of the possibility that we can, across our political community, come to agreement on what justifications are valid, and put beliefs thus justified into practice in the governance of our society. I believe we can do this, because I believe that there are more of us than there are of them–that there are more people of goodwill and reason than there are nihilist opportunists. If I am right, and if enough of us give enough of our efforts to defending the bonds of justification that allow us to agree on sufficient truths to organize ourselves towards a common purpose, we will have turned the totalitarian argument on its head. Orwell’s totalitarians were wrong about truth, but they may have been right about proof.